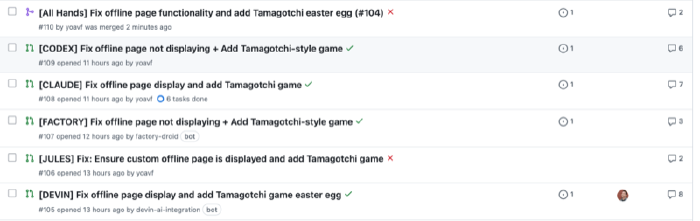

I threw an issue from a side project at 6 AI tools today: Claude Code, OpenAI Codex (the CLI version), Factory.ai, Devin, Jules, and All Hands.

The task was a bug fix + feature. The tricky part: it required setting the browser to offline mode for testing.

Results

None of the agents resolved the issue in one shot, though All Hands eventually got the job done and its PR was merged.

Initially, 5 out of 6 tools failed in exactly the same way. They all made the same wrong assumption about how a specific library config worked, which meant the underlying bug never got fixed. Most implemented the requested feature perfectly, but it was useless without the bug fix, since the feature and the bug were interconnected.

- All Hands eventually got it done after a few iterations and after I pointed it to a specific example online. It was the easiest to work with iteratively. I’m planning to also give OpenHands (the self-hosted version) a try.

- Devin felt the most polished and capable of the hosted agents, but it also seems to be the most expensive – I ran out of credits before I could iterate properly.

- Factory.ai might have solved it with more time (I maxed out my free trial usage), though this enterprise-ready tool is probably overkill for my little side project.

- Jules completely hallucinated the issue content and implemented something totally different (despite being connected to GitHub – not sure what happened there).

- Both Claude Code and Codex are pretty capable. Claude Code is what I’m mainly using now for agentic coding, but I’m planning to spend more time with Codex.

This isn’t a scientific review, obviously – just a quick experiment on real code. But the patterns were interesting enough to share.

Well, that was fun, but way too much time spent on an offline mode easter egg for an unreleased side project 🙂